Today I added to the Git repo a Scala project that implements last post's linear regression model. I started learning the language from a very cool Youtube Scala tutorial early this week, and then decided to buy the reference ebook: Programming in Scala: A Comprehensive Step-by-Step Guide, Third Edition. I'm using the Eclipse-based editor on both the Windows and Ubuntu boxes and so far the experience has been very smooth. The language is more terse than C# while improving readability. Online resources are plentiful, thankfully. Looks like a keeper!

Why the change? It's good to move out of your comfort zone, now and then.

Learning about data science, machine learning, statistics and related stuff like mathematics.

Math formulas rendered with MathJax

Thursday, September 8, 2016

Thursday, September 1, 2016

Seasonal Time Series and Linear Regression

My last post explored an idea to automatically detect outliers on seasonal time series. These time series would have both weekly and daily cycles along with a small trend, such as the ones you will find on some data sources such as server performance meters or even in some business cycles. Data for these time series is typically sampled with a given frequency, like every five minutes or every hour. To detect the existence of unexpected data on such time series, the post followed an idea that instantiates a predictive model for every sampling period of the weekly period. The original idea suggested the use of an exponential moving average model that proved to work very well. I actually tested it with a four-year data set from one of our servers only to find that outlier detection was spot-on. Now, what else can we try with this idea?

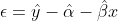

What if we used a different model, say a linear regression? In this case we would have a linear regression model for each one of the sampling periods. As you may recall, the exponential moving average model was implemented as an on-line process, being able to read and discard one sampled measure at a time. Can we do this with a linear regression model? Let's see how to estimate the parameters of a simple linear regression model:

and:

%5Cleft&space;(&space;y_%7Bi%7D-%5Cbar%7By%7D&space;%5Cright&space;)%7D%7B%5Csum_%7Bi=1%7D%5E%7Bn%7D%5Cleft&space;(&space;x_%7Bi%7D-%5Cbar%7Bx%7D&space;%5Cright&space;)%5E%7B2%7D%7D)

Looking at the formula to calculate the slope estimate, it might seem that the whole data set would be needed. After all, the above formula seems to imply that we must keep track of all the x and y values, so the whole data set would have to be kept in memory like a spreadsheet.

Fortunately this is not so. After some algebraic manipulations (see here), we can safely transform the equation to this

where the variables above are very simple averages:

This means that we only need a few variables to store all the necessary information to calculate the regression coefficients. Note that instead of the averages themselves, only the sums will be stored that, along with the sample count (n), will allow to perform the average calculation when needed. The stored variables are then:

For completeness, we will also store Syy (see below why). To determine if a given value is unexpected, we will use the same approach as before: first the expected value is calculated around which an acceptability range is calculated using the expected value's standard error or other similar measure. If the observed value falls outside this range, it is signaled as a potential outlier.

In the case of linear regression there is a standard approach to solve the outlier detection issue. For each estimated value , calculate its residual - the difference between the expected and the observed value - and divide it by the residuals standard error (RSE): the studentized residual in statistics lingo. The residual is pretty easy to calculate:

, calculate its residual - the difference between the expected and the observed value - and divide it by the residuals standard error (RSE): the studentized residual in statistics lingo. The residual is pretty easy to calculate:

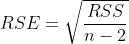

So how do we calculate RSE? Easy:

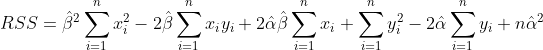

Where RSS, the residual sum of squares is defined as:

^{2})

We can also rearrange this formula using

so that we get

^{2})

Expanding the square, we get

or

which is already expressed in terms of the variables we store. Now, it is very easy to implement the new LinearRegressionModel class. The update method is simply:

Outlier detection is a bit more convoluted:

As usual, the code can be found on GitHub.

Equations in this post were created using a very nice online equation editor. Besides the Wikipedia article, I also used some knowledge from this wonderful book: An Introduction to Statistical Learning: with Applications in R (Springer Texts in Statistics).

What if we used a different model, say a linear regression? In this case we would have a linear regression model for each one of the sampling periods. As you may recall, the exponential moving average model was implemented as an on-line process, being able to read and discard one sampled measure at a time. Can we do this with a linear regression model? Let's see how to estimate the parameters of a simple linear regression model:

and:

Looking at the formula to calculate the slope estimate, it might seem that the whole data set would be needed. After all, the above formula seems to imply that we must keep track of all the x and y values, so the whole data set would have to be kept in memory like a spreadsheet.

Fortunately this is not so. After some algebraic manipulations (see here), we can safely transform the equation to this

where the variables above are very simple averages:

This means that we only need a few variables to store all the necessary information to calculate the regression coefficients. Note that instead of the averages themselves, only the sums will be stored that, along with the sample count (n), will allow to perform the average calculation when needed. The stored variables are then:

For completeness, we will also store Syy (see below why). To determine if a given value is unexpected, we will use the same approach as before: first the expected value is calculated around which an acceptability range is calculated using the expected value's standard error or other similar measure. If the observed value falls outside this range, it is signaled as a potential outlier.

In the case of linear regression there is a standard approach to solve the outlier detection issue. For each estimated value

So how do we calculate RSE? Easy:

Where RSS, the residual sum of squares is defined as:

We can also rearrange this formula using

so that we get

Expanding the square, we get

or

which is already expressed in terms of the variables we store. Now, it is very easy to implement the new LinearRegressionModel class. The update method is simply:

Outlier detection is a bit more convoluted:

As usual, the code can be found on GitHub.

Equations in this post were created using a very nice online equation editor. Besides the Wikipedia article, I also used some knowledge from this wonderful book: An Introduction to Statistical Learning: with Applications in R (Springer Texts in Statistics).

Friday, August 26, 2016

Seasonal Time Series Outlier Detection

First, let's start with this post's inspiration, an excellent idea that was brought to my attention by the weekly O'Reilly Data Newsletter: Data Science tricks: Simple anomaly detection for metrics with a weekly pattern, by Ilias Flaounas. Instead of using one model for the whole time series, the author proposes the creation of multiple models, one for each seasonal measurement. For instance, if every 5 minutes you are sampling a data source that has both a daily and a weekly pattern, you would create 12 * 24 * 7 = 2016 models (there are 12 five-minute intervals in one hour). Other sources, like sales data, might require larger sampling periods and a smaller number of models, e.g. an hourly sampled source would require 168 models for a daily and weekly seasonality.

Instead of using the article's exact formulas, I found an article named "Incremental calculation of weighted mean and variance" by Tony Finch that, besides the interesting read for a math nerd like me, describes how to perform the online calculation of both average and variance of the time series value. By online, I mean that you need not have all the data set in memory in order to calculate the values of interest, but you can read them one by one from a stream, discard the old ones and still be able to get the correct values. This not only saves memory but may also improve performance. The price you pay for this is a small amount of state that must be somehow persisted.

Data science is about data, so we need some of it to show how this anomaly detection method works. For this purpose, I will use simulated cyclical data using a Python script. Each data point corresponds to a five-minute interval sample as above, and I am going to pretend that all days are equal and there is a very small upwards trend. The data is generated with a simple sine function with some random noise added. Later I will manually add some extreme values and check if the code detects them as abnormal. The Python script I used to generate the simulated data is the following:

This script generates data for approximately four years (four 52 week periods). To simulate random variations, the script adds a uniform random value to each generated point. You can either run the code or use the "server-log.csv" file on the git repo.

I implemented the detection code using a small console C# project that reads the whole CSV file and tries to detect any possible outliers. The weekly model is represented by the WeeklyLogModel class that contains an array of 2016 ExponentialMovingModel objects.

The first 32 weeks are used to train the model and the remaining ones are evaluated for outliers. I got to this figure by trial-and-error: too small and you will get too many false positives. Also, I tuned the other parameters as 0.1 for the "memory" parameter and 3.5 for the detection "radius". This parameter is a distance from the model mean, measured in standard deviations, within which acceptable or reasonable values must lie. Outside of this radius, values are considered to be potential outliers. Smaller values will produce more false positives while larger ones may lead to true positives going unreported. This is definitely a parameter to tweak according to your own series.

Running the code as it is shows that the original simulated data only has one false positive at period number 65797. The value you would expect there would be roughly 1226.52 + 3.2 * 7.77 = 1251.38, quite close to the observed 1255.28. All other 419327 log records were considered "normal" or "acceptable".

Now let's try a real outlier. I planted one on a copy of the original file named "server-log-bad.csv" (also on the git repo) at observation number 419000. Just change the file name on the C# project and run it again.

Another approach to this idea can be found here: A simple approach to anomaly detection in periodic big data streams

You can find all the source code for this post on GitHub.

Instead of using the article's exact formulas, I found an article named "Incremental calculation of weighted mean and variance" by Tony Finch that, besides the interesting read for a math nerd like me, describes how to perform the online calculation of both average and variance of the time series value. By online, I mean that you need not have all the data set in memory in order to calculate the values of interest, but you can read them one by one from a stream, discard the old ones and still be able to get the correct values. This not only saves memory but may also improve performance. The price you pay for this is a small amount of state that must be somehow persisted.

Data science is about data, so we need some of it to show how this anomaly detection method works. For this purpose, I will use simulated cyclical data using a Python script. Each data point corresponds to a five-minute interval sample as above, and I am going to pretend that all days are equal and there is a very small upwards trend. The data is generated with a simple sine function with some random noise added. Later I will manually add some extreme values and check if the code detects them as abnormal. The Python script I used to generate the simulated data is the following:

This script generates data for approximately four years (four 52 week periods). To simulate random variations, the script adds a uniform random value to each generated point. You can either run the code or use the "server-log.csv" file on the git repo.

I implemented the detection code using a small console C# project that reads the whole CSV file and tries to detect any possible outliers. The weekly model is represented by the WeeklyLogModel class that contains an array of 2016 ExponentialMovingModel objects.

The first 32 weeks are used to train the model and the remaining ones are evaluated for outliers. I got to this figure by trial-and-error: too small and you will get too many false positives. Also, I tuned the other parameters as 0.1 for the "memory" parameter and 3.5 for the detection "radius". This parameter is a distance from the model mean, measured in standard deviations, within which acceptable or reasonable values must lie. Outside of this radius, values are considered to be potential outliers. Smaller values will produce more false positives while larger ones may lead to true positives going unreported. This is definitely a parameter to tweak according to your own series.

Running the code as it is shows that the original simulated data only has one false positive at period number 65797. The value you would expect there would be roughly 1226.52 + 3.2 * 7.77 = 1251.38, quite close to the observed 1255.28. All other 419327 log records were considered "normal" or "acceptable".

Now let's try a real outlier. I planted one on a copy of the original file named "server-log-bad.csv" (also on the git repo) at observation number 419000. Just change the file name on the C# project and run it again.

Another approach to this idea can be found here: A simple approach to anomaly detection in periodic big data streams

You can find all the source code for this post on GitHub.

Subscribe to:

Comments (Atom)